Can I ask you a question: would you prefer to discover something for yourself or be told what you should know?

Choices as to how you want to learn are to a certain extent personal, perhaps even a learning style, but shouldn’t we be asking which is the most effective? When it comes to that, we have evidence.

The problem is you might not like the results; I’m not sure I do.

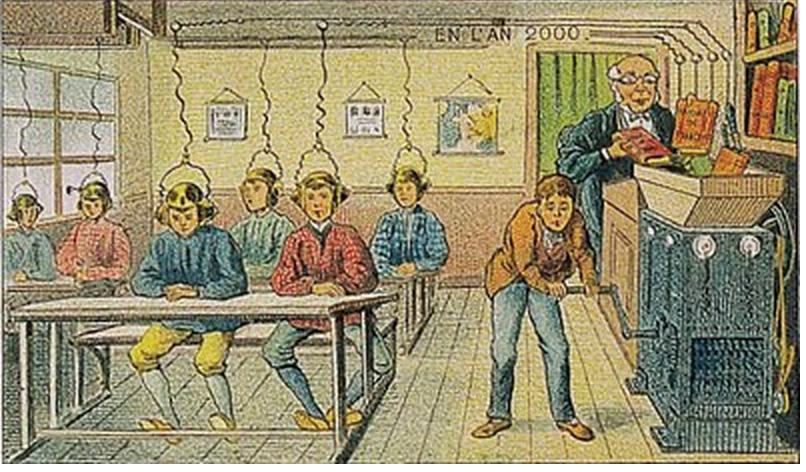

The headline for this month’s blog is not mine but an edited one from John Sweller, of cognitive load fame, in a paper published this August by the Centre for Independent Studies in Australia. Although I have written about some aspects of inquiry-based learning (IBL) before, it’s worth taking a closer look, especially given the impact Sweller believes IBL-type methods have had in Australia. He suggests that the country’s rankings on international tests such as PISA have fallen because of a greater emphasis on IBL in classrooms across the country.

But first…

What is inquiry-based learning?

Inquiry-based learning can be traced back to Constructivism and the work of Piaget, Dewey, Vygotsky et al. Constructivism is an approach to learning that suggests people construct their own understanding and knowledge of the world through experiencing it and reflecting on those experiences. This sits alongside Behaviourism (see last month’s blog) and Cognitivism to form three important theories of learning.

As a process, IBL often starts with a question to encourage students to share their thoughts; these are then carefully challenged in order to test conviction and depth of understanding. The result is a more refined and robust appreciation of what was being discussed: learning has taken place. It is an approach in which the teacher and student share responsibility for learning. There are some slight variations on IBL, including problem-based learning (PBL) and project-based learning (PjBL); in these, rather than a question being the catalyst, it’s a problem.

This method is intuitively attractive and promoted widely in schools and higher education institutions around the world, which is what makes Sweller’s argument so challenging. How can someone “learn better” when they are being told as opposed to discovering the answer for themselves?

What’s wrong with it?

To answer this question, I will quote both Sweller and Richard E. Clark, who challenged inquiry-based learning fifteen years ago in a paper called Why Minimal Guidance During Instruction Does Not Work.

“Unguided and minimally guided methods… ignore both the structures that constitute human cognitive architecture and evidence from empirical studies over the past half-century that consistently indicate that minimally guided instruction is less effective and less efficient than instructional approaches that place a strong emphasis on guidance of the student learning process.”

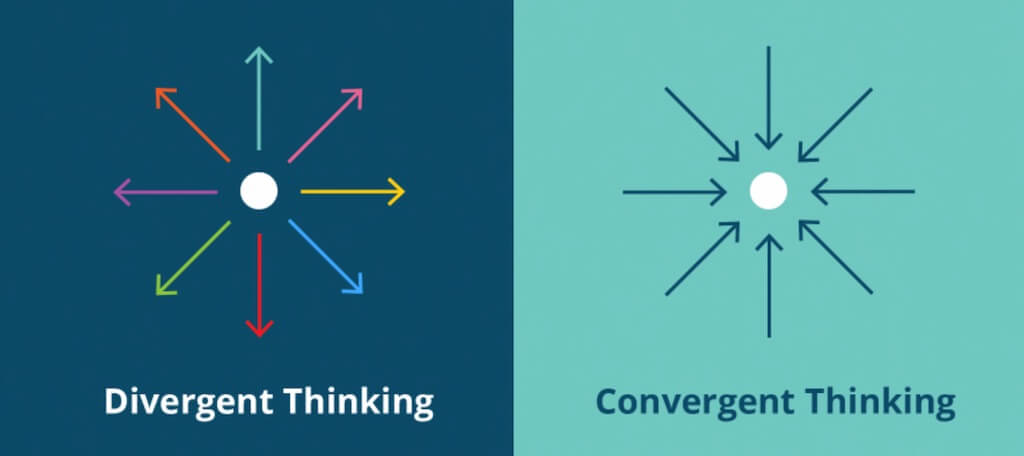

The cognitive architecture they are referring to is the limited capacity of working memory (e.g. 7±2 items) and the resulting need to keep cognitive load to a minimum. In the more recent paper Sweller goes on to explain how the “worked example effect” demonstrates the problems of IBL and the benefits of a more direct instructional approach. If one group of students is presented with a series of problems to solve and another group is given the same problems but with detailed solutions, those who had the worked examples perform better on future common problem-solving tests.

“Obtaining information from others is vastly more efficient than obtaining it during problem solving.” John Sweller

In simple terms, if a student (novice) has to formulate the problem, position it in a way that they can think about it, bring to bear their existing knowledge and then challenge that knowledge, the cognitive load becomes far too high, resulting in at best weak learning and at worst confusion.

“As far as can be seen, inquiry learning neither teaches us how to inquire nor helps us acquire other knowledge deemed important in the curriculum.” John Sweller

What’s better – Direct instruction?

Sweller is not simply arguing against IBL; he is comparing it with, and promoting the use of, direct instruction. This method, you might remember, requires the teacher to present information in a prescriptive, structured and sequenced manner. Direct instruction keeps cognitive load to a minimum and as a result makes it easier to transfer information from working to long-term memory.

Best of both worlds

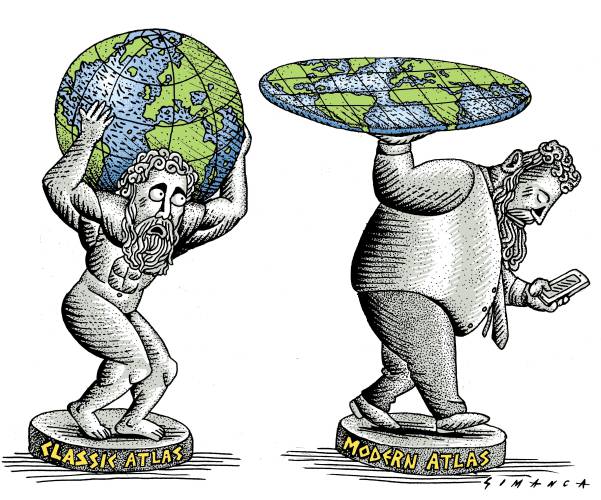

It may be that so far this blog has been a bit academic and does little more than promote direct instruction over IBL; my apologies. The intention was to showcase IBL, clarify what it is and point out some of its limitations, and also to highlight how easy it is to believe that something must be good because it feels intuitively right. In that respect IBL is compelling: we are human and learn from asking questions and solving problems; it’s what we have been doing for thousands of years. But that alone does not make it the best way to learn.

The good news is these methods are not mutually exclusive, and for me John Hattie, coincidentally another Australian, has the answer. He says that although IBL may engage students, which can give an illusion of learning, if you are new to a subject (a novice) and have to learn content as opposed to the slightly deeper relationships between content, then IBL doesn’t work. Also, if you don’t teach the content, you have nothing to reason about.

But there is a place for IBL: it’s after the student has acquired sufficient knowledge that they can begin to explore by experimenting with their own thoughts. The more difficult question is when you should do this, and that is likely to be different for everyone.

One for another day perhaps.